Sensory Perception lab

Listening to auditory cortex

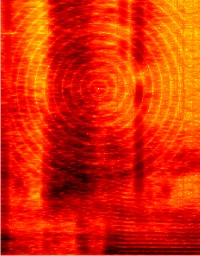

Our research seeks to unravel the relationship between patterns of neural activity in auditory cortex and our perception of the world around us. To hear a sound in our environment, that sound must first be detected as a vibration by the ear. The cochlea encodes the auditory scene by decomposing the incoming sound into its constituent sound waves, creating a representation of the sound frequencies present. The job of the brain is then to take this frequency representation and appropriately group the frequency components that arose from a single object or event, so that the sound source can be recognised and localised in space. Al Bregman described this process as somewhat similar to asking someone to determine what and where objects (boats, ducks, etc.) might be on a lake by monitoring the ripples visible in two small channels dug at the side of that lake.

Our work asks how perceptual features of sounds, such as their pitch or their location in space, are represented at the level of auditory cortex. By studying populations of nerve cells in different cortical fields, we aim to understand how complex sound features are represented, and how these representations are maintained in the noisy listening conditions we face in everyday life. We use a range of behavioural, anatomical, physiological and computational approaches to tackle these questions.

Auditory cortical neurons are not influenced only by the sounds encoded by the cochlea. Attentional state, behavioural context and even the presence of visual stimuli can modulate or drive their activity. Neurons in auditory cortex should therefore not be seen simply as static filters tuned to detect particular acoustic features. Understanding how neural firing underpins sensory perception requires that we measure neural activity during active listening, and likely also requires that we take into account interactions between different sensory systems. Our lab therefore also considers how what we see influences what we hear, both by using psychophysics to better understand the perceptual consequences of combining auditory and visual signals, and by using neurophysiological techniques to understand how, when, and why visual stimuli influence activity in auditory cortex.

Support

Our research is supported by:

Human Frontier Science Program

UCL Grand Challenges